(This post is modified from a version that ran in RT Insights Oct 13, 2022.) Quine streaming graph was built to analyze event streams in real time and drive event pipelines. Now our users are coupling it with digital twins and asset graphs to create accurate, up-to-the-second views of their infrastructure.

The potential of real-time digital twins

When things go wrong, our instinct is to retrace our steps to pinpoint the problem. This process can be slow and frustrating. So, imagine if AI could step in and flag the misstep with data in real time. What if you could pinpoint the exact place: a blockage in a manufacturing process, a small interruption in a logistics chain that could bring deliveries to a halt, or the failure of a piece of critical cloud infrastructure?

What if you could combine real-time alerting and diagnostics with your digital twin? As our world becomes increasingly connected, digital twins are being used to abstract and model almost everything to improve business operations, reduce risk, and enhance decision-making for better outcomes. They provide greater context to challenges by creating clear relationships and streamlining workflows as a virtual representation of the real world, including physical objects, processes, relationships, and behaviors.

Even though they serve a valuable function as part of the enterprise technology toolkit, digital twins are not a technology per se but a specification for the structure and use of real-time data. But this concept is still relatively new, and as data is moving rapidly, how do we maximize the outcomes and role of digital twins?

Seeing digital doubles: An introduction

IBM defines digital twins as “a virtual representation of an object or system that spans its lifecycle, is updated from real-time data, and uses simulation, machine learning, and reasoning to help decision-making.” In short, they can connect digital and physical items through data.

According to McKinsey, “by 2025, smart workflows and seamless interactions among humans and machines will likely be as standard as the corporate balance sheet, and most employees will use data to optimize nearly every aspect of their work.” Realistically, that’s only a couple of years away, meaning we have a short time to get this right. Currently, digital twins are helping revolutionize engineering, security, eCommerce, supply chains, and manufacturing to ensure better outcomes across industries.

Embrace and enhance

Until recently, digital twins were used to simulate real-world processes rather than interact with the world in real time. Either synthetically generated or previously captured data was run (and rerun) in controlled scenarios. As a design and diagnostic aid in product lifecycle management, digital twins have proven enormously helpful (for an early example, think of NASA engineers working with a twin during the Apollo 13 mission).

Digital twins for industrial processes.

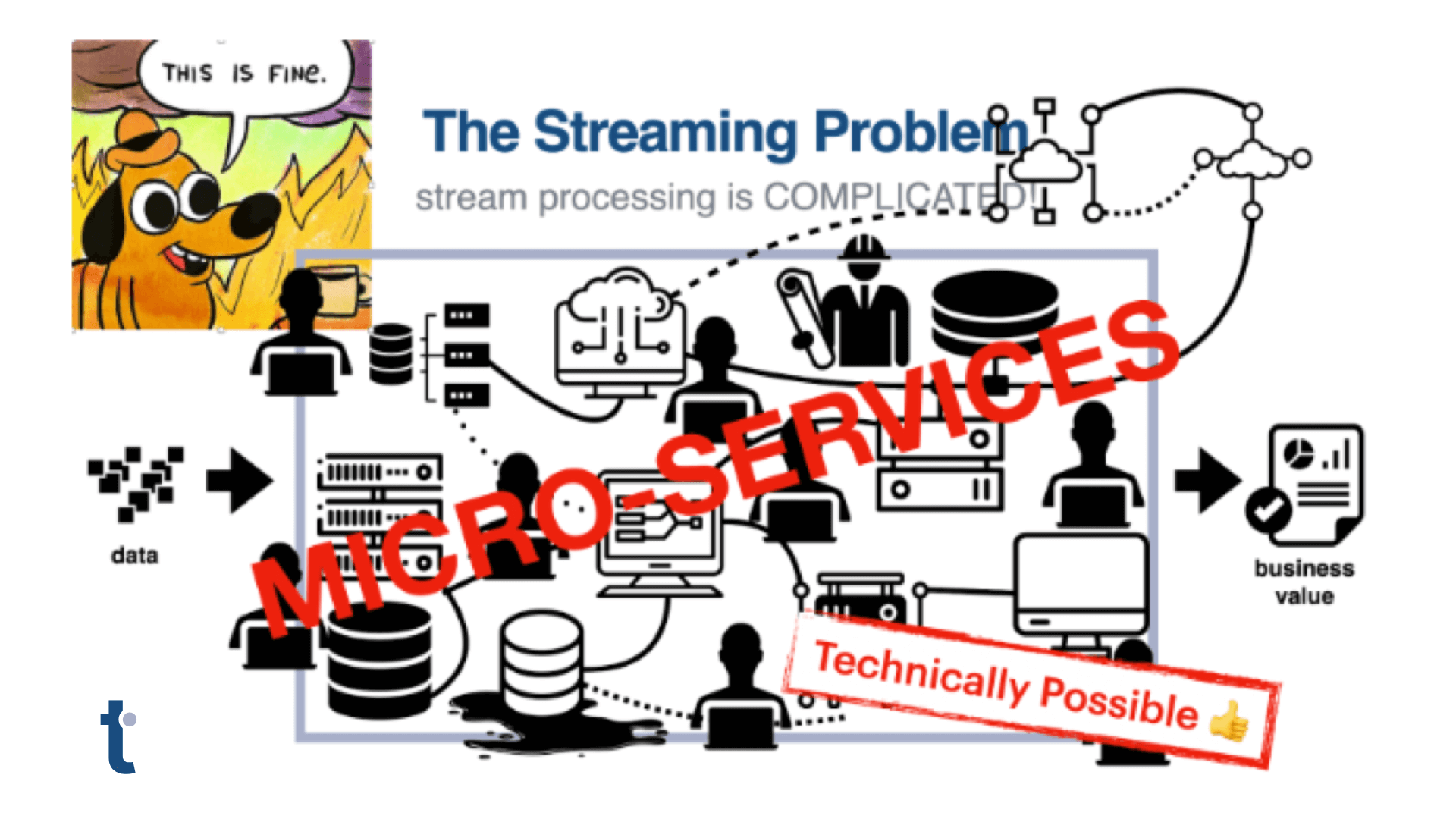

But as enterprises increasingly feel the pressure to replace offline batch processing of event data with real-time event processing, digital twins will need to go real time to remain relevant and valuable. This means moving the digital twin out of legacy databases, graph databases in particular, and into systems capable of processing potentially vast amounts of data the instant it arrives, usually via data pipeline software like Apache Kafka or Spark.

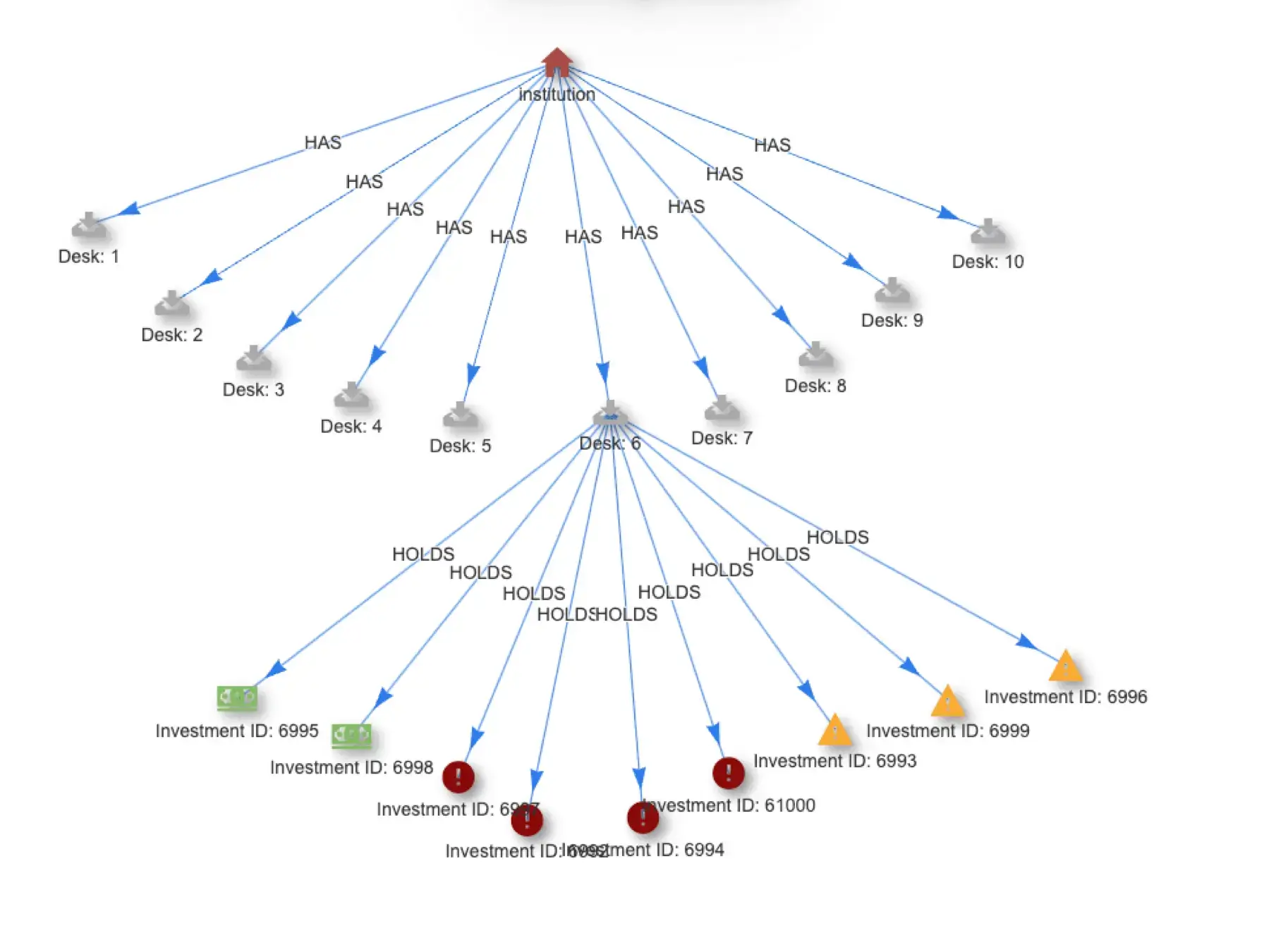

Such systems, called streaming graphs, have evolved in recent years and combine the complex event processing capabilities of Flink and ksqlDB with the powerful data structures popularized by Neo4j, a traditional graph database. Going real time, in this case, means more than just processing data as it streams in, though. For digital twins to be truly useful, they must be able to drive actions (for example, issue alerts or power down equipment) the instant an issue emerges, perhaps even beforehand.

Build a real-time, streaming asset graph

New streaming graph systems have evolved to embed query logic and compute resources in line with data flows. They act like nets stretched across the data stream, capturing interesting patterns as they race past and triggering workflows the instant a match occurs. If our goal is to streamline our data processing and make it more precise, digital twins need to be both graph-based and real-time. The best way to do this is to adopt streaming graph, which combines event stream processing with the ability to query graph data.
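To make the "net stretched across the data stream" idea concrete, here is a minimal Python sketch of a toy asset graph that re-checks a standing pattern every time an event arrives. It is an illustration only and is not Quine's or Streaming Graph's API: the `ToyStreamingGraph` class, the event fields (`id`, `status`, `depends_on`), and the asset names are all hypothetical, and a real streaming graph would handle persistence, scale, and far richer patterns.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Set


class ToyStreamingGraph:
    """Hypothetical in-memory asset graph that checks one standing pattern per event."""

    def __init__(self, on_match: Callable[[str, List[str]], None]) -> None:
        self.status: Dict[str, str] = {}  # asset id -> latest reported status
        self.depends_on: Dict[str, Set[str]] = defaultdict(set)  # asset -> upstream assets
        self.dependents: Dict[str, Set[str]] = defaultdict(set)  # asset -> downstream assets
        self.on_match = on_match  # workflow to trigger when the pattern matches
        self._alerted: Set[str] = set()  # assets that currently match (avoids repeat alerts)

    def ingest(self, event: dict) -> None:
        """Apply one event to the graph, then re-check only the assets it can affect."""
        node = event["id"]
        if "status" in event:
            self.status[node] = event["status"]
        for upstream in event.get("depends_on", []):
            self.depends_on[node].add(upstream)
            self.dependents[upstream].add(node)
        # The event can change the match state of this asset and of its direct dependents.
        for candidate in {node} | self.dependents[node]:
            self._check(candidate)

    def _check(self, node: str) -> None:
        """Standing pattern: an asset with at least one failed upstream dependency."""
        failed = sorted(u for u in self.depends_on[node] if self.status.get(u) == "failed")
        if failed and node not in self._alerted:
            self._alerted.add(node)
            self.on_match(node, failed)
        elif not failed:
            self._alerted.discard(node)  # pattern no longer matches; allow future alerts


# Usage: collect alerts in a list; a real workflow might page someone or power down equipment.
alerts = []
graph = ToyStreamingGraph(on_match=lambda node, failed: alerts.append((node, failed)))
for event in [
    {"id": "pump-1", "status": "ok"},
    {"id": "line-A", "status": "ok", "depends_on": ["pump-1"]},
    {"id": "pump-1", "status": "failed"},
]:
    graph.ingest(event)
print(alerts)  # [('line-A', ['pump-1'])]
```

The design choice worth noticing is that the pattern is evaluated incrementally, at ingest time and only for the assets an event touches, rather than by querying a stored graph after the fact. That is the property the paragraph above describes: the match, and the action it drives, happens as the event passes through.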

The volume of events generated in the physical world must be translated into tangible, usable data. By making digital twins both real-time and graph-based, we can take the training wheels off this area of AI/ML and let it run at its full potential for maximum business impact. thatDot makes the only technology that combines event processing with graph (graph data pipelines is a simple way to describe it) and, as such, is tailor-made for digital twins.

Build Your Own Digital Twin with thatDot

thatDot software is available in both open source Quine and commercial Streaming Graph. You can try it yourself. Learn how to ingest your own data and build a streaming graph that can detect all sorts of changes and problems in real time.

- Try Streaming Graph free for yourself.

- Learn more about thatDot Streaming Graph.

- Join the Quine Discord Community and get help from thatDot engineers and community members.

- Check out the Ingest Data into Quine blog series, covering everything from ingesting from Kafka to ingesting CSV data.

- Download open source Quine – JAR file | Docker Image | GitHub