After a decade of growth in large scale data repositories — databases, data warehouses, data lakes, even data lake houses — a perceptible shift is underway in internet architecture toward event-driven programming. This shift is being spurred on by a wide range of factors including consumer demand for real-time responsiveness, businesses seeking to personalize the customer experience to maximize engagement, and a shared desire for more effective security protections.

Sources of data to deliver on these objectives is a solved issue. The inexorable digitization of commerce, media and social experiences has turned every customer interaction into an ever-growing stream of event data from which companies wish to distill actionable insights in real time.. Extracting high confidence insights through the joining of multiple data sources to expand the context of understanding, however, remains a challenge.

Using Databases to Query Event Streams: Nobody Wins

Together, the enormous scale of data and the requirement to process it in real time poses a huge challenge to companies embracing this new event-driven paradigm. Event streaming solutions like Kafka and Kinesis have quickly evolved to organize and operationalize data streams. However, once companies have turned their data into event streams, they encounter a second problem: how to query these streams in real time. Databases have proven ill-suited for this task.

Processing such big data volumes to extract valuable insights has made it necessary to break the endless stream of events into batches just so existing databases can join data together and apply computation to it. This means waiting for batches of data load, a process that regularly takes hours. And then you are only querying these batches, or snapshots, of data. This is neither streaming or real-time.

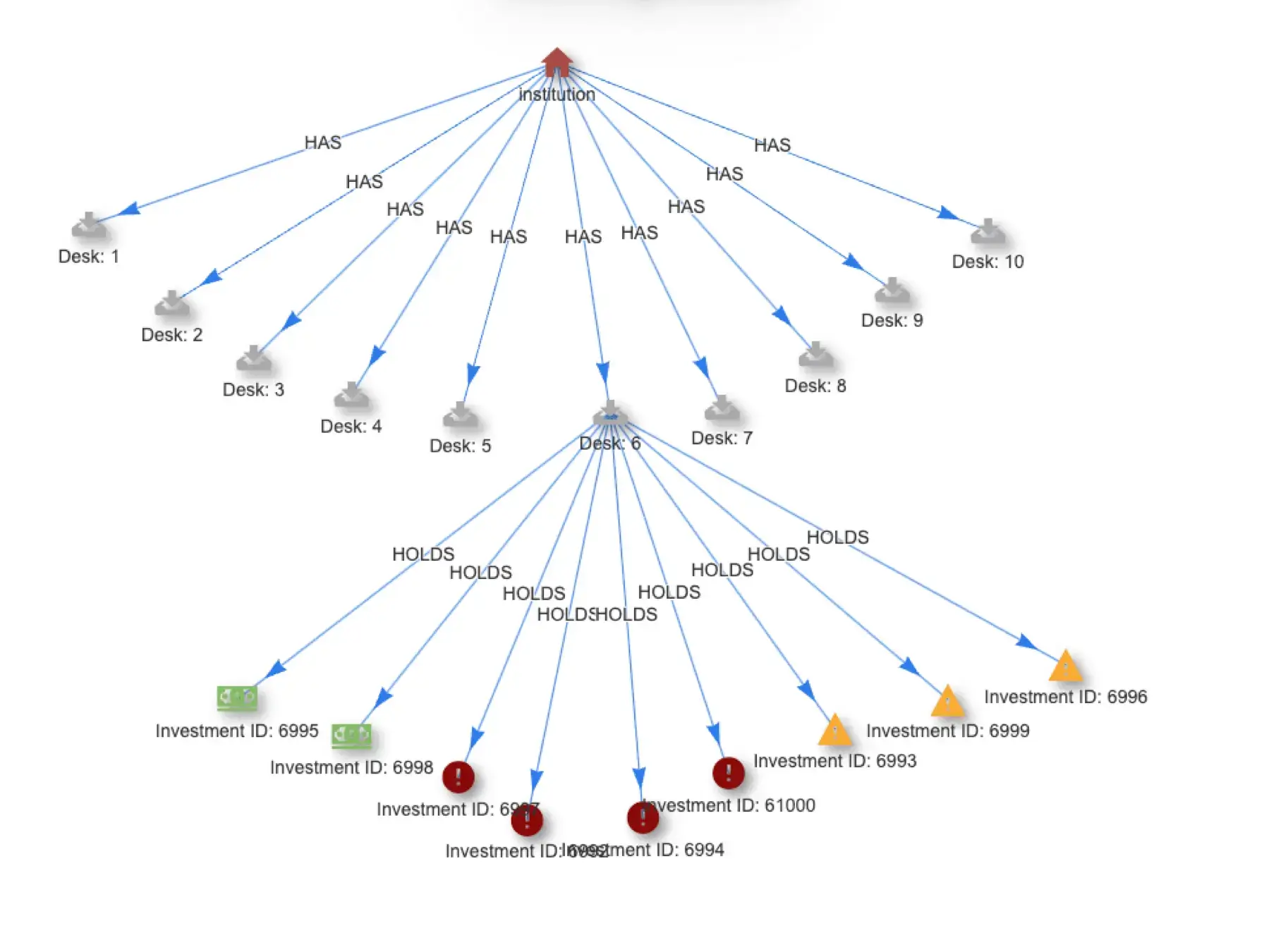

It is straightforward to translate RDBMS data into graph data structures. Quine’s property graph structure encodes relationships as edges.

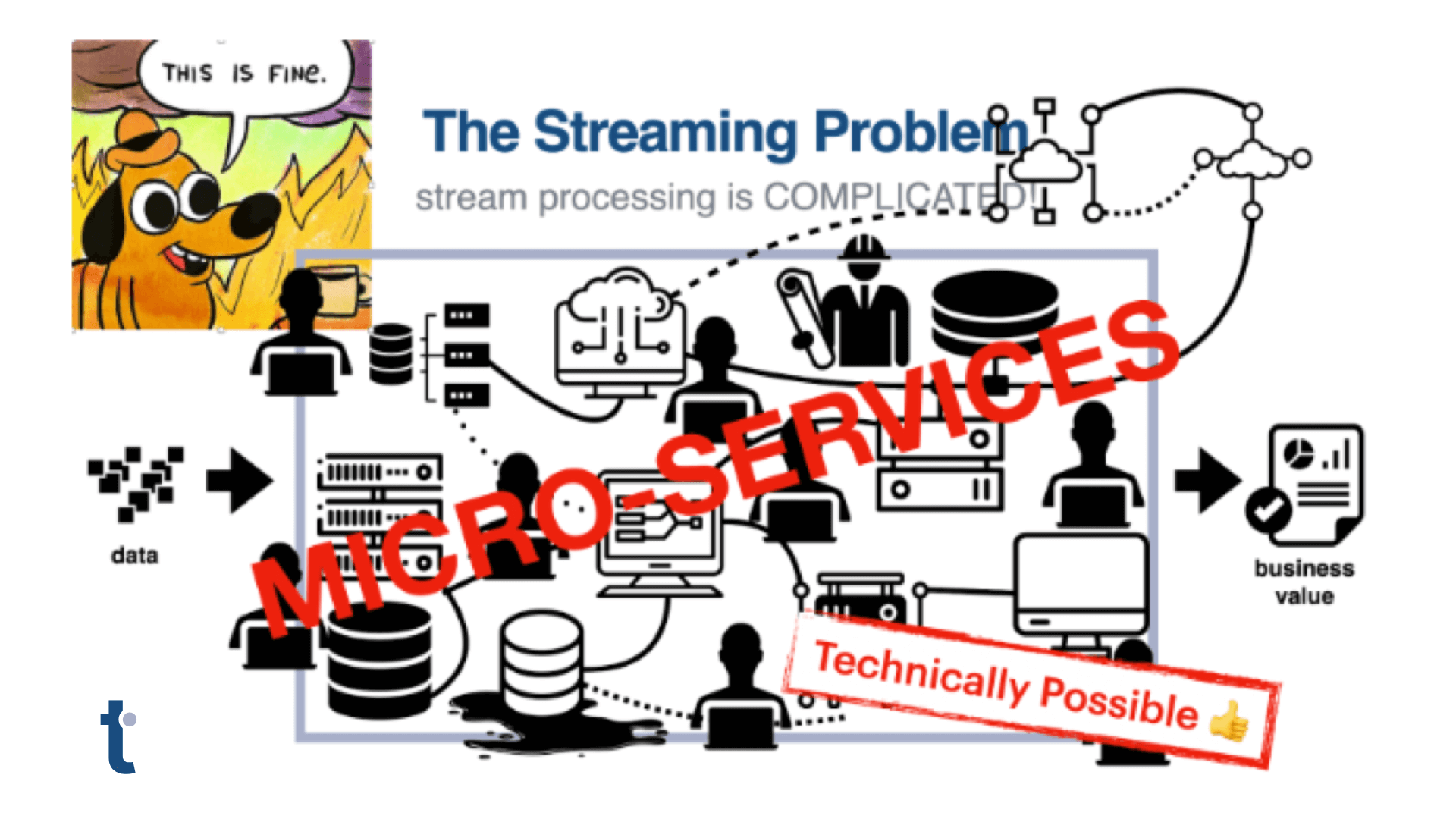

The limitations of databases manifest in the enterprise as complex systems composed of various libraries and services tied together with custom code deployed as micro-services to cross the chasm between high-throughput event streaming platforms and the lower-throughput but higher value operations of databases.

Data engineers have been quite clever in developing sophisticated solutions to deal with these challenges. The typical solution is a complex set of micro services that allows for agile adaptation to new constraints as they surface, but result in an application stack that is difficult to support and which requires expert consultation to manage or change. This complexity inevitably leads to a rebuild every 18-24 months: a poor investment and solution.

Event Stream Processing Addresses only Half the Problem

To query the event streams in real time, developers had to turn to event stream processing systems like Flink and Spark. They make it possible for developers to use familiar SQL syntax to query the event streams. And while this involved some tradeoffs like time windows, in many regards event stream processing systems have been quite successful. Many can scale to process millions of events per second.

But these systems are designed for the relational data model, which while pervasive in industry, lacks the expressive query structures needed to find complex patterns in streams. And it is in the detection of these complex patterns that the real power of event stream processing lies.

So what are we left with? Graph databases, which are well-suited for finding complex relationships with large data sets at rest, were not designed for real-time event data streams. They simply can’t keep up. And event stream processing systems lack the ability to query for the complex relationships and to do so without resorting to time windows.

Enter Streaming Graph

There is a better way: the streaming graph. Streaming graph is a variety of “streaming databases,” an emerging class of software designed specifically to process infinite streams of data. Quine streaming graph brings together the scalability of event stream processing systems with the ability to query for complex relationships offered by graph databases.

Quine streaming graph adds the scalability of event stream processing systems to graph.

Instead of trying to engineer around the shortcomings of graph databases. Quine’s streaming graph technology includes some important innovations:

- Uses a graph data model to understand the relationships between data natively, without the need for joins, nested joins and foreign key management

- Continuous and incremental application of queries to newly arriving data, eliminating the need for time windowing

- Distributes and parallelizes read and write operations to high-throughput and low-latency queries at scale

Quine streaming graph offers teams a simple, drop-in solution capable of ingesting high volume Kafka or Kinesis data streams with sub-millisecond query performance, even when the query involves deep graph traversal. Quine also eliminates time-windows and makes it possible to handle out of order and late-arriving data that is a common limitation of other event-stream processing systems.

Streaming graph fills the architectural gap that exists between high-volume event streaming and high-value graph database computation. The combination of a native understanding of data relationships with high volume event processing provides a new tool for realizing the real-time use cases event-driven programming is meant to enable. Graph AI techniques can now come out of the lab and drive the next generation of recommendations, root-cause analysis, fraud and security threat detection in production.

Learn more about Quine streaming graph, available both in open source and enterprise editions, at www.thatdot.com