Recipe for Streaming Graph Success

Quine Recipes Make Getting Started Easy

In the world of infrastructure software, there is a certain cachet associated with standing up and operating vast, complicated systems. Like tearing down and rebuilding a motor or supercharging your 3D printer, the challenge appeals to the engineer’s mind.

At least until the third or fourth time you have to redeploy one of those complex systems because of a particularly pernicious gremlin. That’s when you start asking yourself (usually at around 3 am the night before launch) why someone can’t make a distributed system that is both easy to deploy and designed to scale up to production workloads.

This is the exact question that led to the creation of Quine streaming graph and, more recently, the introduction of Quine recipes.

Intro to Quine Streaming Graph Recipes

A recipe, in simple terms, is a YAML or JSON document containing all the information Quine needs to execute any batch or streaming data processing task. It is referenced when invoking Quine and is often used for modeling, development, and testing on local systems. Here’s an example of a recipe that creates a graph by ingesting each line in “$in_file” as a graph node with property “line”:

    version: 1
    title: Ingest
    contributor: The thatDot Team
    summary: Ingest input file lines as graph nodes
    description: Ingests each line in "$in_file" as graph node with property "line".
    ingestStreams:
      - type: FileIngest
        path: $in_file
        format:
          type: CypherLine
          query: |-
            MATCH (n)
            WHERE id(n) = idFrom($that)
            SET n.line = $that
    standingQueries: [ ]
    nodeAppearances: [ ]
    quickQueries: [ ]
    sampleQueries: [ ]

Pretty simple.
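To make the recipe's behavior concrete, here is a minimal Python sketch of what the ingest query does to each line. It is not Quine code. The `id_from` helper is a hypothetical stand-in for Cypher's `idFrom` (a UUIDv5 hash is used only to show that the same input always maps to the same node ID; Quine derives IDs its own way), and a plain dictionary stands in for the graph.

```python
import io
import uuid

# Hypothetical namespace for this sketch; Quine derives IDs with its own scheme.
SKETCH_NAMESPACE = uuid.UUID("00000000-0000-0000-0000-000000000000")


def id_from(value: str) -> uuid.UUID:
    """Stand-in for idFrom: deterministically map a value to a node ID."""
    return uuid.uuid5(SKETCH_NAMESPACE, value)


def ingest_lines(lines, graph=None):
    """Mimic the recipe's query: one node per distinct line, with property 'line'."""
    graph = {} if graph is None else graph
    for raw in lines:
        that = raw.rstrip("\n")                      # plays the role of $that
        node = graph.setdefault(id_from(that), {})   # MATCH (n) WHERE id(n) = idFrom($that)
        node["line"] = that                          # SET n.line = $that
    return graph


sample_file = io.StringIO("alpha\nbeta\nalpha\n")    # stands in for $in_file
graph = ingest_lines(sample_file)
print(len(graph))  # repeated lines resolve to the same node
```

Because the ID is computed from the line's content, ingesting the same line twice updates one node rather than creating a duplicate.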

But don’t underestimate the extensibility of recipes. Using the same simple template as this recipe, you can configure Quine to ingest and process multiple event streams, build highly connected graphs, and set up standing queries that do everything from handling out-of-order and late-arriving data to writing results back into the graph or out to Kafka topics.

And once you’ve constructed your recipe, everyone on your team has a handy reference for what you’ve built. This is especially useful for recurring tasks, like log processing (see the Apache Access Log recipe) or for teams that are growing or that want to maintain continuity as people come and go. Did I mention that you can embed comments in your recipes?

Recipes are also a great way to contribute back to the community. For example, community member Alok Aggarwal contributed a recipe for calculating CDN cache efficiency that is already among the most popular on the Quine site.

Best part: once you’re satisfied with the recipe, it can be pushed to a production system via the Quine RESTful API.

Anatomy of a Quine Streaming Graph Recipe

To develop a recipe that is executable from a command line, you may use the following YAML template as a starting point:

    version:
    title:
    contributor:
    summary:
    description:
    ingestStreams: []
    standingQueries: []
    nodeAppearances: []
    quickQueries: []
    sampleQueries: []
    statusQueries: []

The first five items – version number, title, contributor (that’s you, the author), summary, and description – are pretty self-explanatory. The summary and description are optional but nice to have. If you plan to submit the recipe to Quine.io, fill in the optional fields to give the community context for your recipe, along with details such as data source and output formats.
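As a pre-submission check, a short script can report which template fields a draft recipe still lacks. This is only a sketch: `missing_recipe_keys` is a hypothetical helper, not part of Quine, the key list is copied from the template above, and the draft is loaded as JSON because recipes may be written in either YAML or JSON.

```python
import json

# Top-level keys copied from the template above.
TEMPLATE_KEYS = [
    "version", "title", "contributor", "summary", "description",
    "ingestStreams", "standingQueries", "nodeAppearances",
    "quickQueries", "sampleQueries", "statusQueries",
]


def missing_recipe_keys(recipe: dict) -> list:
    """Return the template keys that do not appear in the recipe at all."""
    return [key for key in TEMPLATE_KEYS if key not in recipe]


# A deliberately incomplete draft, written as JSON.
draft = json.loads("""
{
  "version": 1,
  "title": "Ingest",
  "contributor": "The thatDot Team",
  "summary": "Ingest input file lines as graph nodes",
  "ingestStreams": [{"type": "FileIngest", "path": "$in_file"}]
}
""")

print(missing_recipe_keys(draft))
```

The check only lists absent keys. It does not decide which ones Quine requires, so treat the output as a checklist rather than a validation result.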

The next two sections – ingestStreams and standingQueries – define your recipe’s behavior.

Ingesting and Modeling Data in the Streaming Graph

The first query type we will build is an Ingest Stream. Information in the Ingest Stream provides everything Quine needs to find and consume data in order to build a streaming graph.

Quine was specifically designed to handle the demands of high volume streaming data. You can use recipes to ingest from Kafka, Kinesis, and SNS/SQS.

In addition to event streaming sources, you can ingest data from files, named pipes, and stdin. In the simple Ingest example above, the type FileIngest indicates that the source is a file, and the CypherLine format hands each line to the Cypher query as $that.

From a functional perspective, data ingested from a file is read line by line and behaves just like a stream when consumed. The only difference is that a file is read as fast as the system can handle, whereas a stream may be rate limited by the incoming data.

Standing Queries: Quine’s Superpower

Now that we have data ingested into the graph, we should do something with it (although you don’t have to). Let’s set up a standing query.

Standing queries persist in the graph, waiting until their query condition is matched and then triggering an action (e.g., updating the graph, executing code, or writing to Kafka). Standing queries are definitely worth mastering.

With every standing query, we have to provide two things. First, we provide the pattern for the system to match; then we describe the action we want it to take, in the form of a query output.
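The pattern-plus-output pairing can be illustrated with a toy model in Python. This is a sketch of the idea only, not how Quine implements standing queries: the `ToyStandingQuery` class and its `on_update` method are hypothetical, and the caller has to notify it after each write, whereas Quine evaluates patterns inside the graph as data arrives.

```python
from typing import Callable, Dict, List, Set

Node = Dict[str, object]


class ToyStandingQuery:
    """Toy model: a stored pattern plus an output action that fires on new matches."""

    def __init__(self, pattern: Callable[[Node], bool],
                 output: Callable[[str, Node], None]):
        self.pattern = pattern            # what to match
        self.output = output              # what to do with each new match
        self._matched: Set[str] = set()   # node IDs already reported

    def on_update(self, node_id: str, node: Node) -> None:
        """Call after every write; the action runs only when a node newly matches."""
        if self.pattern(node):
            if node_id not in self._matched:
                self._matched.add(node_id)
                self.output(node_id, node)
        else:
            self._matched.discard(node_id)


results: List[str] = []
query = ToyStandingQuery(
    pattern=lambda n: n.get("tainted") is not None,
    output=lambda node_id, n: results.append(f"{node_id} tainted={n['tainted']}"),
)

graph: Dict[str, Node] = {"acct-1": {}}
query.on_update("acct-1", graph["acct-1"])   # no match yet, nothing happens
graph["acct-1"]["tainted"] = 0
query.on_update("acct-1", graph["acct-1"])   # pattern now matches, action fires
query.on_update("acct-1", graph["acct-1"])   # still matching, no duplicate result
print(results)
```

The query is registered once and keeps waiting; the action runs at the moment the data first satisfies the pattern, not when the query is written.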

The simple example we started with doesn’t include a standing query, so let’s take a look at one from another recipe (the Ethereum recipe), which propagates the tainted flag along outgoing transaction paths:

    standingQueries:
      - pattern:
          query: |-
            MATCH (tainted:account)<-[:from]-(tx:transaction)-[:to]->(otherAccount:account),
                  (tx)-[:defined_in]->(ba:block_assoc)
            WHERE
              tainted.tainted IS NOT NULL
              AND NOT EXISTS (ba.orphaned)
            RETURN
              id(tainted) AS accountId,
              tainted.tainted AS oldTaintedLevel,
              id(otherAccount) AS otherAccountId
          type: Cypher
          mode: MultipleValues
        outputs:
          propagate-tainted:
            query: |-
              MATCH (tainted), (otherAccount)
              WHERE
                tainted <> otherAccount
                AND id(tainted) = $that.data.accountId
                AND id(otherAccount) = $that.data.otherAccountId
              WITH *, coll.min([($that.data.oldTaintedLevel + 1), otherAccount.tainted]) AS newTaintedLevel
              SET otherAccount.tainted = newTaintedLevel
              RETURN
                strId(tainted) AS taintedSource,
                strId(otherAccount) AS newlyTainted,
                newTaintedLevel
            type: CypherQuery
            andThen:
              type: PrintToStandardOut
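The effect of this standing query is that each account ends up with the smallest number of transaction hops separating it from a tainted account. The Python sketch below reproduces that arithmetic on an in-memory list of transactions. It makes two assumptions worth stating: `coll.min` is taken to skip null values (an account that has never been tainted has no `tainted` property), and the orphaned-block check is left out for brevity. The account names are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple


def min_ignoring_null(values: List[Optional[int]]) -> int:
    """Assumed behavior of coll.min here: null (None) entries are skipped."""
    return min(v for v in values if v is not None)


def propagate_tainted(
    transactions: List[Tuple[str, str]], tainted: Dict[str, int]
) -> Dict[str, int]:
    """Repeat the output query's update until no account's level changes."""
    levels = dict(tainted)
    outgoing: Dict[str, List[str]] = {}
    for sender, receiver in transactions:
        outgoing.setdefault(sender, []).append(receiver)

    pending = deque(levels)                      # accounts whose level just changed
    while pending:
        source = pending.popleft()
        for other in outgoing.get(source, []):
            if other == source:                  # tainted <> otherAccount
                continue
            new_level = min_ignoring_null([levels[source] + 1, levels.get(other)])
            if levels.get(other) != new_level:   # SET otherAccount.tainted = newTaintedLevel
                levels[other] = new_level
                pending.append(other)            # a changed account can match again
    return levels


# Hypothetical account names; each pair is (from, to) for one transaction.
txs = [("a", "b"), ("b", "c"), ("a", "c"), ("c", "a")]
print(propagate_tainted(txs, {"a": 0}))
```

Account `c` is reachable both directly from `a` and through `b`, and the minimum keeps the shorter path. In the recipe there is no explicit loop: setting `otherAccount.tainted` makes that account match the pattern in turn, which is what carries the flag further along the chain.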

Optional Recipe Elements to Customize the Experience

Now that you’ve ingested and are querying data, you can use the remaining parameters to customize the user experience.

nodeAppearances: [] – use to customize how nodes appear in the web exploration UI

quickQueries: [] – use to add queries to node context menus in the web exploration UI

sampleQueries: [] – use to customize the sample queries listed in the web UI

statusQueries: [] – use to specify a Cypher query to be executed and reported to the recipe user

Try the Ethereum Tag Propagation Recipe

If you are interested in learning more about recipes, there’s no better way than to try one for yourself. I recommend the Ethereum Tag Propagation recipe because it uses actual live data and its use case – detecting tainted transactions on the blockchain – is more relevant by the day.

And if you are interested in creating your own recipe, here are some additional reference resources to get you started:

Thanks for taking the time to read this and bon appétit!

Related posts

-

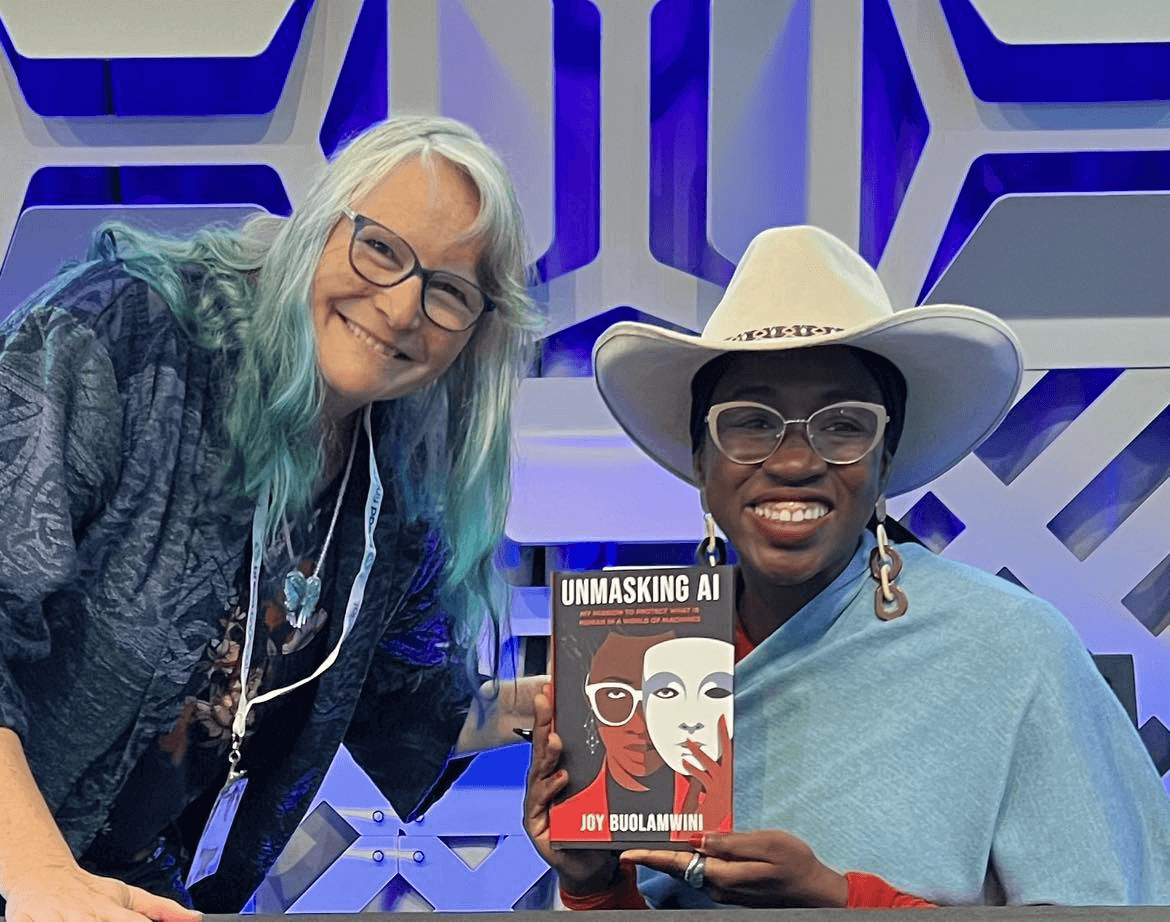

Stream Processing World Meets Streaming Graph at Current 2024

The thatDot team had a great time last week at Confluent’s big conference, Current 2024. We talked to a lot of folks about the power of Streaming Graph,…

-

Streaming Graph Get Started

It’s been said that graphs are everywhere. Graph-based data models provide a flexible and intuitive way to represent complex relationships and interconnectedness in data. They are particularly well-suited…

-

Streaming Graph for Real-Time Risk Analysis at Data Connect in Columbus 2024

After more than 25 years in the data management and analysis industry, I had a brand new experience. I attended a technical conference. No, that wasn’t the new…