Just the numbers, please

- We sustained an ingest rate of 425,000 records per second into our streaming graph

- We ran 64 thatDot Quine Enterprise hosts on AWS c5.2xlarge instances (the most important feature being the 8 vCPUs per host)

- This was supported by 15 Apache Cassandra hosts on m6gd.4xlarge instances

- The total cost was less than $13/hour to run at full scale using reserved instances

Why spend the time?

thatDot’s enterprise version of Quine is designed to be a web-scale stream processing and data store solution. We’re always developing with an eye toward performance and wanted to demonstrate the linear scaling the system is built to deliver.

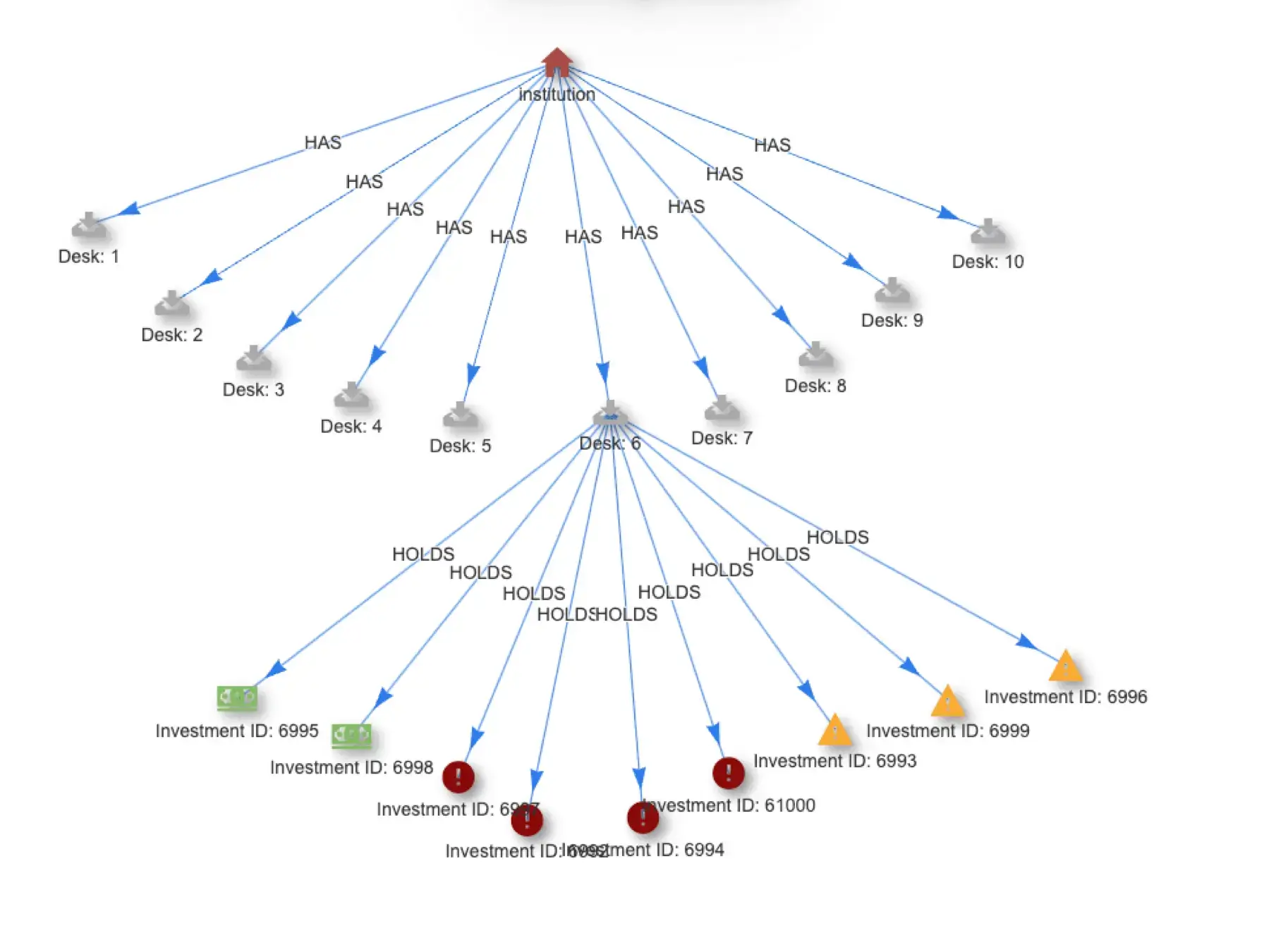

The architecture of Quine takes some interesting twists on traditional data management systems. For example, each node in a Quine graph is capable of performing its own computation. This makes Quine massively parallel, meaning that in practice, we can almost always keep every core on a host machine busy. Furthermore, we take advantage of deterministic entity resolution to ensure that regardless of how much data Quine has already ingested, each additional record takes roughly the same amount of time to process, analyze, and store.

Starting the scaling effort

To start, we set a goal of processing 250,000 records per second. If you ask me, that’s a lot of data. If you ask our lead Sales Engineer Josh, he’ll just laugh and say, “I’ve seen worse.” Regardless, it’s a nice round number that’s easy to talk about and do math with. Great for benchmarking. For our dataset, we generated a simulated log of process creation events, like you might find reported by an intrusion detection system. Each record had 9 fields of varying types.

Our plan was to create a node in Quine for each process event, with a property on the node for each field and an edge linking each process to its parent. Each record was protobuf-encoded, to keep the [de]serialization simple but nontrivial, and to reduce the amount of storage the Kafka topic would need.
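To make that data model concrete, here is a minimal in-memory sketch in Python. It is an illustration only, not Quine's API: the field names (`pid`, `parent_pid`, `name`), the `Graph` class, and the hash-based `node_id` scheme are all hypothetical stand-ins, since the actual nine fields and ID scheme are not listed in this post.

```python
import hashlib
from dataclasses import dataclass, field


def node_id(pid: int) -> str:
    """Derive a stable node ID from a process ID (hypothetical scheme)."""
    return hashlib.sha256(f"process:{pid}".encode()).hexdigest()[:16]


@dataclass
class Node:
    properties: dict = field(default_factory=dict)
    edges: set = field(default_factory=set)  # (label, target_node_id) pairs


class Graph:
    def __init__(self):
        self.nodes = {}  # node ID -> Node

    def node(self, nid: str) -> Node:
        # Fetch-or-create: the same ID always resolves to the same node.
        return self.nodes.setdefault(nid, Node())

    def ingest(self, event: dict) -> str:
        nid = node_id(event["pid"])
        self.node(nid).properties.update(event)  # one property per field
        parent_pid = event.get("parent_pid")
        if parent_pid is not None:
            parent = node_id(parent_pid)
            self.node(parent)  # parent may not have arrived yet: placeholder
            self.node(nid).edges.add(("parent", parent))
        return nid


graph = Graph()
child = graph.ingest({"pid": 42, "parent_pid": 1, "name": "bash"})
parent = graph.ingest({"pid": 1, "parent_pid": None, "name": "init"})
```

Because the ID is computed from the record itself, the child event can arrive before its parent and both still land on the right nodes without any lookup of previously ingested data.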

We started small, with just 4 hosts, each a c5.2xlarge. We got to a moderate, but respectable, 30,000 records per second ingested, from Kafka to Cassandra. That’s 7,500 records per second per host. Once we had played around a bit, we doubled the cluster size, up to 8 hosts. Accordingly, our ingest rate nearly doubled, to 56,000 nodes created per second, or 7,000 per host.

The big clusters

With the Quine application smoothly performing on 8 hosts, we decided to quadruple the cluster size to 32 hosts, expecting to see our pattern of linear scaling continue. Unfortunately, instead of the 220,000 records per second we expected, we saw only 144,000. Strangely, the 144,000 figure wasn’t stable: the cluster’s ingest rate fluctuated constantly. We were momentarily baffled, until we realized that in our excitement, we had forgotten to increase Cassandra’s size in proportion to the new Quine cluster size. The Quine graph will back-pressure when some system components, like the Cassandra data storage layer, cannot keep up. Back-pressuring keeps the overall system stable instead of overwhelming the now-underpowered data storage layer. The result was a disappointing-but-oddly-beautiful initial performance graph.
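For readers unfamiliar with back-pressure, the general idea can be sketched with a bounded buffer: when the consumer falls behind, the producer is forced to wait instead of piling up work. The Python below is a generic illustration under that assumption, not a description of how Quine implements it, and `run_pipeline` and its parameters are made-up names.

```python
import queue
import threading
import time


def run_pipeline(n_records: int, buffer_size: int, write_delay: float) -> dict:
    buffer = queue.Queue(maxsize=buffer_size)  # bounded: put() blocks when full
    stats = {"written": 0, "max_depth": 0}

    def slow_store():
        # Stand-in for an under-provisioned storage layer.
        while True:
            record = buffer.get()
            if record is None:  # sentinel: no more records
                break
            time.sleep(write_delay)
            stats["written"] += 1

    writer = threading.Thread(target=slow_store)
    writer.start()
    for i in range(n_records):
        buffer.put(i)  # blocks while the store is behind, throttling ingest
        stats["max_depth"] = max(stats["max_depth"], buffer.qsize())
    buffer.put(None)
    writer.join()
    return stats


stats = run_pipeline(n_records=50, buffer_size=5, write_delay=0.001)
```

Every record is eventually written, and the buffer never grows past its bound. The cost is that the ingest rate drops to whatever the slowest component can sustain, which is the kind of behavior we saw with the undersized Cassandra cluster.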

Once we realized that the Cassandra JVM was scrambling to keep up with the memory pressure of a 32-host Quine Enterprise cluster, we had no trouble increasing the Cassandra cluster to use more hosts, and bigger hosts. By kicking Cassandra up from nine 2xlarge instances to fifteen 4xlarge instances, we had more than enough capacity to run Quine at scale. We hit our projected 220,000 records per second without any trouble. The cluster smoothly maintained its pattern of linear scaling, ingesting a more or less constant 220,000 events per second, creating nodes and edges. We were so close to hitting our goal of 250,000 records per second. We probably could have added just a few more hosts to reach the goal. After all, we had every indication that every host added to the cluster would add around 7,000 records per second to the cluster’s ingest rate.
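The "just a few more hosts" estimate is easy to check. The short Python sketch below uses only the figures quoted so far and assumes the roughly 7,000 records per second per host continues to hold; the function names are ours for illustration.

```python
import math

# Ingest rates reported so far: hosts -> records per second.
measured = {4: 30_000, 8: 56_000, 32: 220_000}

# Per-host throughput at each cluster size.
per_host = {hosts: rate / hosts for hosts, rate in measured.items()}
print(per_host)  # {4: 7500.0, 8: 7000.0, 32: 6875.0}

RATE_PER_HOST = 7_000  # the "around 7,000" per-host figure
GOAL = 250_000


def hosts_needed(target: int, rate_per_host: int = RATE_PER_HOST) -> int:
    """Smallest host count whose projected rate meets the target."""
    return math.ceil(target / rate_per_host)


def projected_rate(hosts: int, rate_per_host: int = RATE_PER_HOST) -> int:
    """Projected cluster ingest rate under perfectly linear scaling."""
    return hosts * rate_per_host


print(hosts_needed(GOAL))    # 36
print(projected_rate(36))    # 252000
```

Under that assumption, 36 hosts (four more than the 32 we were running) would have been enough to clear 250,000 records per second.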

We did not add just a few more hosts. Instead, we doubled the cluster size. That ran us into an EC2 resource limit imposed by AWS, but once we cleared that hurdle, it was smooth sailing and everything worked as hoped!

We kicked off the ingest on each of the 64 clustered thatDot Quine hosts, and it was immediately clear we’d far exceeded our goal.

Linear Scaling!

We had achieved linear scaling to 64 hosts. We exceeded our initial target of 250,000 records per second and maintained an ingest rate of over 425,000 log events per second, each creating a node with several properties, and most creating at least one edge to connect them into a graph. That’s over 1.1 trillion events a month.
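For anyone who wants to check the math, here is the arithmetic behind those figures as a short Python sketch. It assumes a 30-day month and treats the "less than $13/hour" figure from the summary at the top of this post as an upper bound on cost.

```python
RATE = 425_000                 # records per second, sustained
HOSTS = 64                     # Quine Enterprise hosts
SECONDS_PER_DAY = 24 * 60 * 60
DAYS_PER_MONTH = 30            # assumption: a 30-day month
MAX_COST_PER_HOUR = 13.0       # USD, upper bound from the summary

events_per_month = RATE * SECONDS_PER_DAY * DAYS_PER_MONTH
per_host_rate = RATE / HOSTS
records_per_hour = RATE * 60 * 60
max_cost_per_billion = MAX_COST_PER_HOUR / (records_per_hour / 1e9)

print(f"{events_per_month:,}")          # 1,101,600,000,000
print(per_host_rate)                    # 6640.625
print(f"{max_cost_per_billion:.2f}")    # 8.50
```

That works out to a little over 6,600 records per second per host, and at most about $8.50 of infrastructure per billion records ingested.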

The work continues

Of course, we’re not done. We’re always working to explore what can be done with stream processing systems at large scales, and we’re excited to offer our products to support others in their explorations.