With thatDot you can resolve entities, filter out noise, and save on database costs

With the thatDot event stream processing platform, you can combine data streams from multiple sources, find and resolve duplicated entities across them, transform and enrich data, and run deep analytics and anomaly detection, all in real time, before the data comes to rest in a database. This holistic approach enhances data pipelines, ensuring high-quality, actionable data that drives better business outcomes. It also filters out mountains of useless noise, so you can store all the important signals in your database without spending a ton on cloud database infrastructure.
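To make that flow concrete, here is a minimal sketch in plain Python. It is not thatDot's API; it only illustrates the idea of merging streams, resolving duplicate entities, and dropping noise before anything is stored. The field names (`ts`, `email`, `amount`), the email-based matching rule, and the `min_amount` threshold are all assumptions made for illustration.

```python
import heapq
from typing import Dict, Iterable, Iterator

Event = Dict[str, object]


def merge_streams(*streams: Iterable[Event]) -> Iterator[Event]:
    """Interleave several time-ordered streams into one, ordered by 'ts'."""
    return heapq.merge(*streams, key=lambda e: e["ts"])


def entity_key(event: Event) -> str:
    """Hypothetical resolution rule: same normalized email, same entity."""
    return str(event["email"]).strip().lower()


def process(events: Iterable[Event], min_amount: float) -> Iterator[Event]:
    """Drop low-value noise, then emit each event tagged with its entity."""
    seen: Dict[str, int] = {}
    for event in events:
        if float(event["amount"]) < min_amount:
            continue  # noise: filtered out before it reaches the database
        key = entity_key(event)
        seen[key] = seen.get(key, 0) + 1
        yield {**event, "entity": key, "events_for_entity": seen[key]}


# Two hypothetical sources that describe overlapping entities.
crm = [
    {"ts": 1, "email": "Ann@Example.com ", "amount": 50.0},
    {"ts": 4, "email": "bob@example.com", "amount": 0.5},
]
web = [
    {"ts": 2, "email": "ann@example.com", "amount": 75.0},
    {"ts": 3, "email": "bob@example.com", "amount": 20.0},
]

results = list(process(merge_streams(crm, web), min_amount=1.0))
```

In this sketch the two differently formatted addresses for Ann resolve to a single entity, and the low-value event from Bob is dropped before storage. A production system would use far richer matching rules and would keep its state somewhere more durable than an in-memory dictionary.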

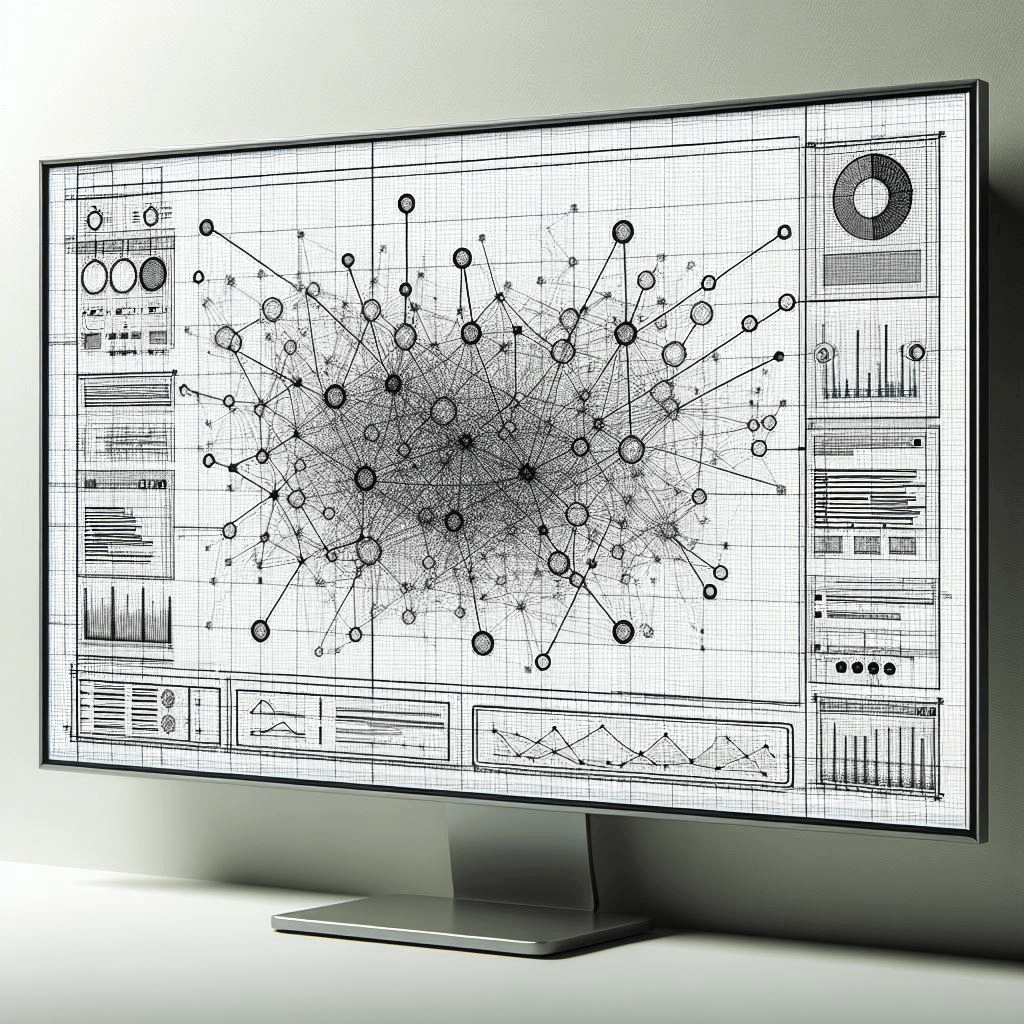

Real-time Data Pipeline Processing

thatDot excels at processing data in real time and drops right into your existing data pipeline, whether your data is streaming in Kafka, batched in a file, or, more commonly, both. This capability ensures that data is always up to date and available for analysis, supporting timely decision-making and operational efficiency.

Efficient Handling of High-Volume Data

Smart filtering of your data means dealing with massive volumes from multiple streams, including machine logs and sensor data that swamp technology designed for the much lower volumes of human-generated data. thatDot’s powerful stream processing engine has been proven to manage and process high-throughput data, with no upper limit yet found, so the data pipeline remains performant and scalable even as data volumes grow.

Support for a Wide Variety of Data Sources

thatDot’s platform integrates with a wide range of streaming and batch data sources and destinations, allowing for seamless data flow between disparate systems. This flexibility ensures that data in different formats and from different origins can be transformed consistently, maintaining data integrity and coherence.

Use Cases

- Streaming Graph ETL

  The Problem: Most ETL tools use the batch processing paradigm to find high-value patterns in large volumes of data. Whether the specific business application is fraud detection, cyber…

- Stateful Digital Twin

  The Problem: While digital twins and the emerging subcategory of asset graphs promise operators greater visibility into the relationships between IT assets and equipment under management, current approaches…